How to Create Reliable Acceptance Testing Systems

(Circle of LabVIEW: Part 5 of 6)

This is Circle of LabVIEW: Acceptance Testing, the fifth in a series of blog posts that describe the stages of the LabVIEW project life cycle from idea to market. Although reading them in order will help you understand the bigger picture, each post is self-contained and includes a small section on the "RT Installer," a sample product used throughout the series.

This blog post tackles what happens once principal development is done. It describes the process we use to verify that each of the requirements has been implemented and is functionally correct as dictated by the scope.

Acceptance testing can happen during principal development, as functionality milestones are met, or toward the end of the development process, when most of the application is complete. For most projects, formal verification (as opposed to spot-checking functionality) is best done at the end of the development life cycle. Some functionality, especially tightly coupled systems or recipe control, is testable only once multiple portions of the application are complete.

Requirement verification is a somewhat organic process. It is often run with the client and developer side by side. Acceptance testing is aided by the following documents:

- System Acceptance Test

- System Test Descriptions

- Requirements Traceability Matrix

- System Requirements Specification

Test descriptions are a collection of scenarios, each walking through a process that exercises specific requirements. A single test description may cover one or more requirements, and several test descriptions may exercise the same requirement in different ways.

We visualize this coverage with a requirements traceability matrix, a document that shows which test descriptions cover which requirements and where tests overlap. Developers are prone to bias when writing test descriptions: they tend to write tests that follow how the application is written rather than the process it performs, which makes the tests more likely to pass. To counter this, we generally have developers draft the test descriptions and the client approve or modify them.

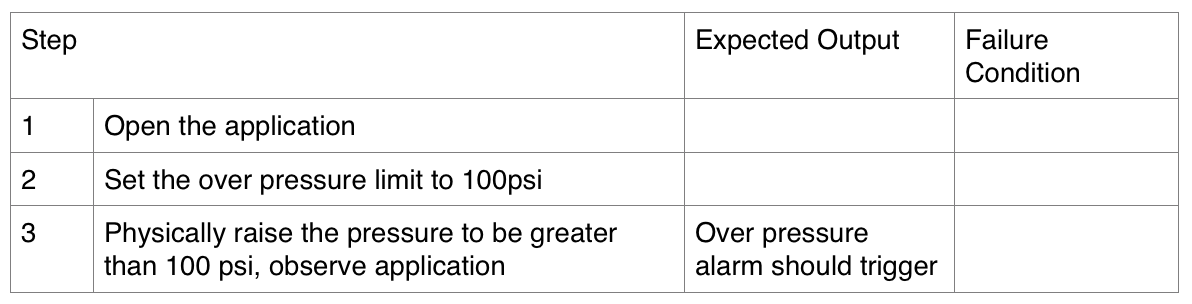
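To make the idea of a traceability matrix concrete, here is a minimal sketch in Python. It is not how our documents are generated; the requirement and test description IDs (`REQ-...`, `TD-...`) are hypothetical placeholders. It inverts a "test description covers these requirements" mapping so that uncovered requirements and overlapping tests are easy to spot.

```python
from collections import defaultdict

# Hypothetical IDs: which requirements each test description exercises.
TEST_COVERAGE = {
    "TD-001": {"REQ-001", "REQ-002"},
    "TD-002": {"REQ-002", "REQ-003"},
    "TD-003": {"REQ-003"},
}
ALL_REQUIREMENTS = {"REQ-001", "REQ-002", "REQ-003", "REQ-004"}


def build_matrix(coverage):
    """Invert test -> requirements into requirement -> tests."""
    matrix = defaultdict(set)
    for test_id, requirements in coverage.items():
        for requirement in requirements:
            matrix[requirement].add(test_id)
    return dict(matrix)


def uncovered(all_requirements, matrix):
    """Requirements that no test description touches."""
    return sorted(r for r in all_requirements if r not in matrix)


def overlapping(matrix):
    """Requirements exercised by more than one test description."""
    return {r: sorted(t) for r, t in sorted(matrix.items()) if len(t) > 1}


matrix = build_matrix(TEST_COVERAGE)
print("Uncovered:", uncovered(ALL_REQUIREMENTS, matrix))
print("Overlap:", overlapping(matrix))
```

In this made-up data set, one requirement has no test description at all, which is exactly the kind of gap the matrix is meant to expose before the client sits down for the formal test.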

The formal acceptance process uses the "Test Execution Worksheets" included in the System Acceptance Test Document. Each worksheet records whether a test description passed, along with any notes. For example, consider the requirement provided earlier:

If pressure (PT-001) is greater than the over pressure limit (LIM-001), software will trigger over-pressure alarm (ALM-001).

A test description for this requirement could look like:

If this test description completes successfully, the requirement has been met.
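As a rough illustration of how such a requirement can be checked step by step, the Python sketch below steps a simulated pressure through a few values and compares the alarm state against what the requirement says it should be. This is not the project's LabVIEW code or its actual test description: the function name, the step values, and the 100 psi limit are assumptions made for the example.

```python
LIMIT_PSI = 100.0  # assumed value for LIM-001


def over_pressure_alarm(pressure_psi, limit_psi):
    """Stand-in for the software under test: ALM-001 is active
    only when PT-001 is strictly greater than LIM-001."""
    return pressure_psi > limit_psi


# Each step: (simulated PT-001 reading in psi, expected ALM-001 state).
STEPS = [
    (90.0, False),   # below the limit
    (100.0, False),  # equal to the limit: "greater than" is not met
    (100.1, True),   # just above the limit
    (120.0, True),   # well above the limit
]


def run_test_description(steps, limit_psi):
    """Execute every step and record expected versus actual alarm state."""
    results = []
    for pressure, expected in steps:
        actual = over_pressure_alarm(pressure, limit_psi)
        results.append((pressure, expected, actual, actual == expected))
    return results


results = run_test_description(STEPS, LIMIT_PSI)
passed = all(ok for *_, ok in results)
print("PASS" if passed else "FAIL")
```

Including a step exactly at the limit is a deliberate choice: the requirement says "greater than," so the boundary is where a client and a developer are most likely to disagree.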

Alternatively, the worksheet allows conditional passes. For example, if the over-pressure alarm triggers but there is no audible indicator, the client could argue that the requirement "passed with exception," saying that the feature needs a klaxon to be "fully functional." This would be a new feature, and whether it is in scope would be debatable.

The client could also cycle the pressure above and below 100 psi, note that the alarm deactivates and reactivates (i.e., does not latch), identify this as a bug, and mark the test as failed.

Once a test description has passed, it doesn't need to be executed again unless changes are made to a part of the application tied to the requirements that the test description covers.

A successfully completed System Acceptance Test (SAT) is a collection of Test Execution Worksheets (TEWs) in which every test description has been executed and passed without exception. Each SAT run that falls short of this gives developers the remaining bugs and features to address before the SAT can pass.
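One way to picture this bookkeeping is the Python sketch below. It assumes, purely for illustration, that each worksheet is a mapping from test description ID to result and that the most recent result for a test description is the one that counts; the IDs and the `Result` names are hypothetical, not taken from our worksheets.

```python
from enum import Enum


class Result(Enum):
    PASS = "pass"
    PASS_WITH_EXCEPTION = "pass with exception"
    FAIL = "fail"


def latest_results(worksheets):
    """Merge worksheets in execution order; later results override earlier ones."""
    merged = {}
    for worksheet in worksheets:
        merged.update(worksheet)
    return merged


def open_items(worksheets, test_ids):
    """Test descriptions not yet executed or not passed without exception."""
    latest = latest_results(worksheets)
    return sorted(t for t in test_ids if latest.get(t) is not Result.PASS)


def sat_complete(worksheets, test_ids):
    """The SAT is complete only when nothing remains open."""
    return not open_items(worksheets, test_ids)


TEST_IDS = ["TD-001", "TD-002", "TD-003"]
tew_1 = {
    "TD-001": Result.PASS,
    "TD-002": Result.FAIL,
    "TD-003": Result.PASS_WITH_EXCEPTION,
}
tew_2 = {"TD-002": Result.PASS}  # only the failed test was re-run
tew_3 = {"TD-003": Result.PASS}  # exception resolved and re-tested

print(open_items([tew_1], TEST_IDS))
print(open_items([tew_1, tew_2], TEST_IDS))
print(sat_complete([tew_1, tew_2, tew_3], TEST_IDS))
```

Treating "passed with exception" as still open mirrors the definition above: the SAT is not done until every test description passes without exception.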

Once principal development for the "RT Installer" was complete, we developed the appropriate documents and ran the SAT internally. Due to some configuration issues, we ran four SATs and developed a list of bugs and features, some of which we implemented during the SAT process and some of which we left for later. The related documents are as follows:

- See the System Acceptance Test Document for the RT Installer.

- See the System Test Descriptions for the RT Installer.

- See the Requirements Traceability Matrix for the RT Installer.

- See Test Execution Worksheet #1 (RT Installer)

- See Test Execution Worksheet #2 (RT Installer)

- See Test Execution Worksheet #3 (RT Installer)

- See Test Execution Worksheet #4 (RT Installer)

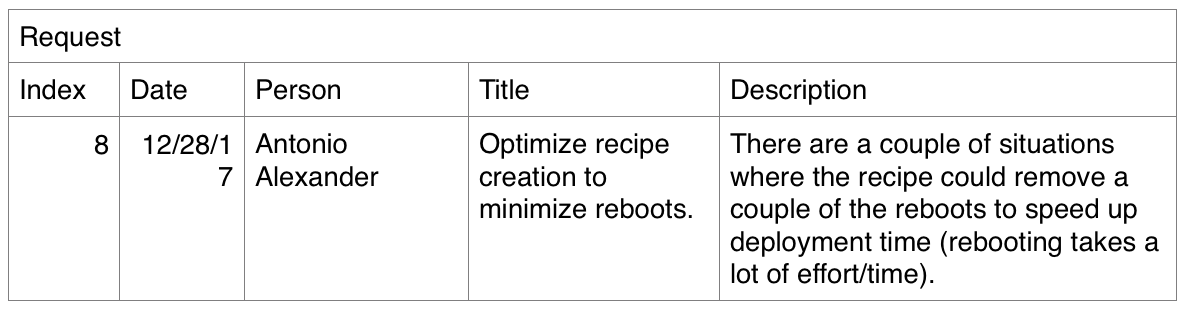

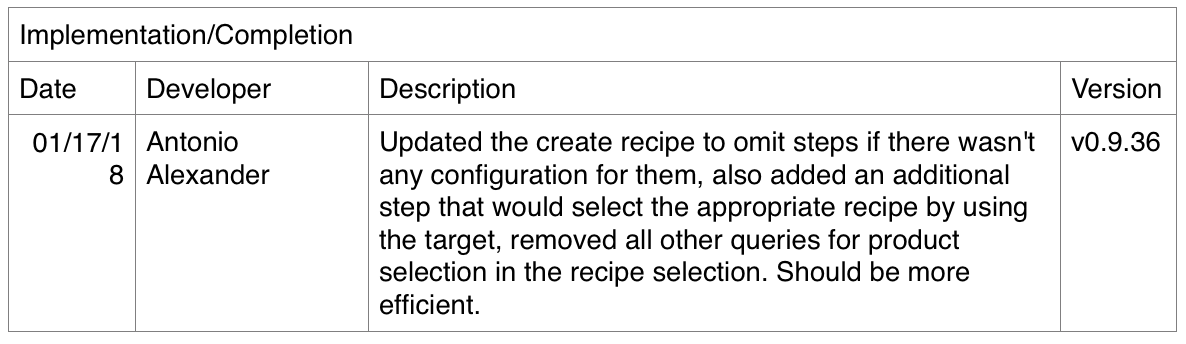

During acceptance testing, we noticed that automatic deployment took longer than expected. As recorded under feature #8 in the source status spreadsheet, I thought the automatic deployment process could be optimized by minimizing the number of reboots. You can see before-and-after videos here, as well as the information from the source status spreadsheet.

(Before feature #8)

(After feature #8 implemented)

The change reduced automatic deployment time by 25% (12 minutes versus 16 minutes) with the exact same configuration.
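For anyone who wants to check the figure, the reduction is measured against the original 16-minute deployment. A small Python sketch of the arithmetic (the function name is ours, for illustration only):

```python
def percent_reduction(before, after):
    """Reduction relative to the original value, as a percentage."""
    return (before - after) / before * 100


# Deployment times in minutes, before and after feature #8.
print(percent_reduction(16, 12))  # 25.0
```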