Thoughts On Testing

Unit Testing

The notion of unit testing is a noble one. It is even better if you have a framework that formalizes the testing interface and can be run as part of the build validation process.

Sadly, once a project is up and running, unit tests are typically not maintained. Interfaces change, the tests no longer build, and they get excluded from the build process.

Then one day, when someone wants to run the tests, they may not build, or their success/failure conditions have changed or been relaxed as a result of new requirements.

Unless there is a team dedicated to QA/testing, unit testing will likely be seen as less important as other project pressures mount.

Functional Block Testing

I tend to view unit tests as early functional tests that are used to validate the more complex components in isolation before they are integrated. This approach can be justified once a project starts to become moderately complex, even if its components are ultimately viewed as one-offs. I would argue that any component complex enough to be validated independently is worth testing before the integration phase. Testing at this point helps formalize and validate the interfaces of those components, and it makes integration testing flow more smoothly.

I would argue that these component tests should be viewed as simulations of the environment the component will run in rather than static tests.

Simulations

Candidates for simulations range from internal components of moderate complexity to external components.

Internal Components

Internal components are relatively easy to simulate, especially if you have a well-defined interface contract that can easily be abstracted. You can start with stubbed-out implementations that do little or nothing. The actual component can then be tested in isolation, while the simulated component provides added instrumentation and diagnostics as well as arbitrarily complex behavior for testing.

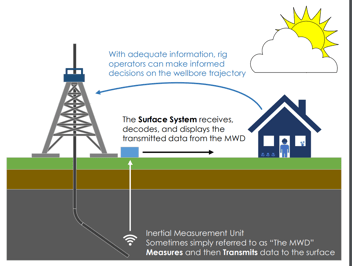
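A minimal Python sketch of this idea follows. The `TemperatureSensor` interface, the `OverheatMonitor` component under test, and both sensor implementations are hypothetical names chosen for illustration. The stub only satisfies the contract; the simulated sensor adds instrumentation (a call counter) and scripted behavior (a replayed sequence of readings) so the monitor can be exercised in isolation.

```python
from abc import ABC, abstractmethod


class TemperatureSensor(ABC):
    """Hypothetical interface contract for an internal component."""

    @abstractmethod
    def read_celsius(self) -> float:
        ...


class StubSensor(TemperatureSensor):
    """First step: satisfies the contract but does nothing interesting."""

    def read_celsius(self) -> float:
        return 20.0


class SimulatedSensor(TemperatureSensor):
    """Simulated component with scripted behavior and instrumentation."""

    def __init__(self, readings):
        if not readings:
            raise ValueError("at least one scripted reading is required")
        self._readings = list(readings)
        self.call_count = 0  # instrumentation: how often the monitor polled us

    def read_celsius(self) -> float:
        self.call_count += 1
        # Replay the scripted readings, holding the last value once exhausted.
        index = min(self.call_count - 1, len(self._readings) - 1)
        return self._readings[index]


class OverheatMonitor:
    """Hypothetical component under test; it depends only on the interface."""

    def __init__(self, sensor: TemperatureSensor, limit: float, samples: int = 3):
        self._sensor = sensor
        self._limit = limit
        self._samples = samples

    def is_overheating(self) -> bool:
        # Require consecutive readings above the limit to filter out spikes;
        # all() stops polling at the first reading at or below the limit.
        return all(self._sensor.read_celsius() > self._limit
                   for _ in range(self._samples))


if __name__ == "__main__":
    sensor = SimulatedSensor([95.0, 96.5, 97.0])
    monitor = OverheatMonitor(sensor, limit=90.0)
    print(monitor.is_overheating())  # True
    print(sensor.call_count)         # 3
```

Because the monitor sees only the interface, the same test can later run against the real sensor driver, and the call counter can expose polling behavior that would be hard to observe on the real component.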

External Components

I would argue for considering simulation of external components as well. Anything that connects through a network, serial port, CAN bus, etc., can readily be replaced with a simulation that supports the connected protocol and mimics the behavior of the desired external component. These may be components that in reality would be embedded systems. The simulation, however, can run on the desktop and have user interfaces to facilitate testing. It does not have to be pretty; it just needs to be functional. The beauty of this approach is that it can be used to test and validate the protocol between the two devices even before the external device exists. This approach does have issues:

- it takes time to implement the simulated component

- the simulated component may behave slightly differently (of course it will)

- it will require maintenance as the project progresses (especially if it proves useful)

- should be done early on for maximum ROI (not really a con, but just an observation)

- the behavior of the component can always be questioned (true but this can be easily addressed and will result in a more robust system in the end)

It has benefits as well:

- it validates the protocol defined by the interface (which can be done before the external component exists)

- it may point out deficiencies of the defined protocol

- it allows integration of the component to be done in a real sense in the absence of the desired external component

- once complete, the simulation will almost certainly have different performance characteristics than the final system. This will highlight integration issues that might otherwise never be noticed.

- it can become a reference when interface questions come up (because its behavior may be more deterministic than that of the actual component)

- these simulations allow work to be done remotely in the absence of the actual hardware (this comes in handy when implementing new features: you can completely implement new functionality with a high likelihood that it just works when you test with the complete system)

- these simulations can be made relatively complex with regard to their behavior (in the scenario where the simulated component would actually be firmware and the simulation is done on the desktop). This in itself can open up testing that could be difficult with the actual component.

- a system that can be shown to work properly with both the real component and one or more simulations will be more robust, since the relative performance of the two will likely differ. There is a small argument for providing more than one simulation (ideally implemented by different parties) to further explore performance corners and unanticipated exceptions.

As mentioned earlier, this approach involves some work, but it does not have to be pretty; it can start as a quick-and-dirty implementation. In my experience, it will be heavily used.
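As a rough sketch, the Python below stands in for an external device reached over TCP. The line-oriented protocol (`PING`, `GET TEMP`), the `SimulatedDevice` class, and the `query` helper are all hypothetical; a serial port or CAN bus would need a different transport, but the structure (a protocol handler plus adjustable device state) would be similar. The `response_delay` setting deliberately makes the simulation's timing differ from the real device, which is one way to surface the integration issues described above.

```python
import socket
import socketserver
import threading
import time


class SimulatedDeviceHandler(socketserver.StreamRequestHandler):
    """Answers a hypothetical line-based protocol on behalf of the real device."""

    def handle(self):
        for raw in self.rfile:  # one command per line until the client disconnects
            command = raw.decode("ascii", errors="replace").strip()
            # Optional artificial latency, so timing differs from the real device.
            time.sleep(self.server.response_delay)
            if command == "PING":
                reply = "PONG"
            elif command == "GET TEMP":
                reply = f"TEMP {self.server.temperature:.1f}"
            else:
                reply = f"ERR unknown command: {command}"
            self.wfile.write((reply + "\n").encode("ascii"))


class SimulatedDevice(socketserver.ThreadingTCPServer):
    allow_reuse_address = True
    daemon_threads = True

    def __init__(self, address=("127.0.0.1", 0), temperature=21.5, response_delay=0.0):
        super().__init__(address, SimulatedDeviceHandler)
        # Device state that a test script or a simple UI can change at runtime.
        self.temperature = temperature
        self.response_delay = response_delay


def query(port, command, timeout=2.0):
    """Minimal client standing in for the host-side code under test."""
    with socket.create_connection(("127.0.0.1", port), timeout=timeout) as sock:
        sock.sendall((command + "\n").encode("ascii"))
        with sock.makefile("r", encoding="ascii") as reader:
            return reader.readline().strip()


if __name__ == "__main__":
    device = SimulatedDevice(temperature=23.0, response_delay=0.05)
    threading.Thread(target=device.serve_forever, daemon=True).start()
    port = device.server_address[1]  # port 0 above lets the OS pick a free port
    print(query(port, "PING"))      # PONG
    print(query(port, "GET TEMP"))  # TEMP 23.0
    print(query(port, "RESET"))     # ERR unknown command: RESET
    device.shutdown()
    device.server_close()
```

Keeping the device state in plain attributes is a deliberate choice: a test script, or a crude desktop UI, can change `temperature` or `response_delay` while the host software is connected, which is the kind of behavior that may be hard to provoke on the real hardware.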

Testing with Others (People)

Inevitably, we must work with other parties to help debug a system. This can be frustrating, because you have tested and debugged your system and it works, right?

Of course, this is what everyone thinks. Things to keep in mind:

- keep an open mind - even though you cannot imagine a problem with your implementation, it is likely to be your problem 50% of the time (assuming peers of equivalent experience)

- be patient and listen. Even though you have scoped out the problem and have iron clad data to defend your point of view, the other party may have a different perspective.

- I have noticed that people have different methods (approaches) to debugging, and you are not likely to make progress until the other party has had a chance to run through his/her process.

- regardless of your confidence level, don’t start out with an adversarial approach. Enlist their help. Present your data and ask them to help you understand what it means. This is a substantial difference in approach.

- understand what your data means. You will have two types of data: one type is reproducible and can be easily studied. This is golden. The second type is anecdotal and may be nearly impossible to reproduce. This data is important, but you must be willing to discard it. Memory is funny; many times I have had a strong recollection of events that just could not be reproduced. Be aware of this data, but recognize that you cannot defend it if it is called into question.

- if the problem is even moderately complex, document all you can about it. This will give all parties something concrete to review. Many times, the right person reviewing this data can identify the source of the problem.

- when you are approached about a problem, be aware of your state of mind. Many times I have been told about a problem (the validity of which I had absolutely no reason to doubt). I looked through the code and/or hardware and whatnot and could not see the problem. Long story short, on many occasions, after having walked through the setup so that I experienced the problem firsthand, I have been able to proceed directly to its cause. I wish I could explain this.

Test procedures

Product testing falls into two categories whether it is recognized or not:

- Functionality Testing – testing components of the system or product for quantifiable behavior that can be categorized as pass/fail. Ultimately, this is what all testing is about, and it is relatively straightforward.

- Design Validation – I am not sure that is the proper name, but this is essentially what happens when a relatively inexperienced party is instructed to test the system without a formalized procedure. Testing is done haphazardly on whichever components are documented, and inconsistent results are seen. Eventually, unexpected interactions between components may be exposed. This is rarely done on purpose, and it would be difficult to do formally, since it would require documenting the unknown or implied assumptions built into the system.

Usually the problems that would be exposed by what I refer to as ‘Design Validation’ are discovered by early adopters, beta testers and such. This is the likely scenario if the push is to get the product out the door quickly.

At any rate, actual testing should not be performed by parties that are intimately familiar with the system under test. These parties "understand" the unknown and implied assumptions built into the system and have become blind to Design Validation issues. Ironically, any single person assigned to test a system will develop this blindness after a few rounds of testing as well.